Core Architecture

Agentfield's distributed architecture with control plane and independent agent nodes

Distributed control plane with independent agent nodes—infrastructure for autonomous software

Agentfield's architecture is designed around a simple principle: separate the boring infrastructure from the intelligent code.

The control plane handles orchestration, state management, identity, and observability—the infrastructure concerns that every production system needs. Agent nodes contain your business logic and AI—the code that makes decisions and takes actions.

This separation enables what monolithic frameworks can't: independent deployment, automatic coordination, and production-grade reliability.

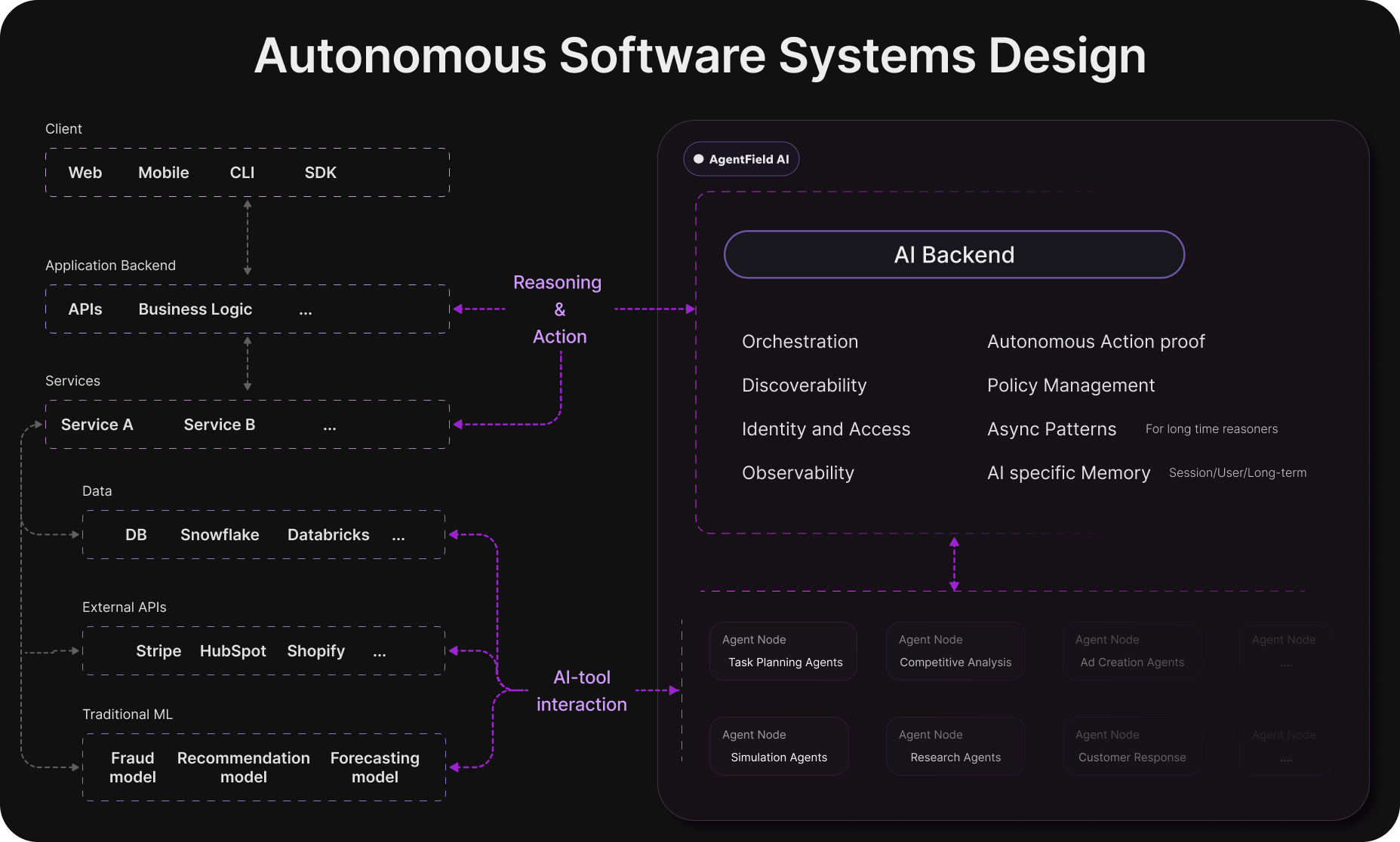

Autonomous Software Systems Design

Your existing software stack—clients, APIs, services, databases, and external integrations—connects to Agentfield's AI Backend through reasoning and action. The AI Backend handles the infrastructure layer (orchestration, identity, observability, async patterns, memory) while Agent Nodes execute your business logic: research, analysis, customer response, and more.

The Two-Layer Design

Control Plane: The Infrastructure Layer

Written in Go for performance and horizontal scalability. Stateless services that can scale independently. This is the "boring" layer—and boring is exactly what you want for infrastructure.

What it handles:

- Request routing and service discovery

- Workflow tracking and execution context

- Memory management and synchronization

- Digital identity (DIDs) and verifiable credentials (VCs)

- Durable queues with backpressure controls

- Webhook delivery and retries
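To make the backpressure item above concrete, here is a minimal sketch of a bounded queue that rejects new work once it is full. It is an in-memory illustration only, so it is not durable, and the `BoundedJobQueue` class and its method names are hypothetical rather than part of Agentfield.

```python
import queue


class BoundedJobQueue:
    """Hypothetical in-memory queue that refuses work beyond a fixed limit."""

    def __init__(self, max_pending: int):
        self._jobs = queue.Queue(maxsize=max_pending)

    def submit(self, job) -> bool:
        # Reject instead of blocking, so callers can slow down or retry later.
        try:
            self._jobs.put_nowait(job)
        except queue.Full:
            return False
        return True

    def take(self):
        # A worker removes the oldest job, which frees one slot.
        return self._jobs.get_nowait()


jobs = BoundedJobQueue(max_pending=2)
accepted = [jobs.submit(f"job-{i}") for i in range(3)]
first = jobs.take()
accepted_after_take = jobs.submit("job-3")
```

Rejecting at submission time is one way to push load back onto callers. A real control plane would persist the queue and could signal rejection differently.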

Why Go:

- Native concurrency for high-throughput request handling

- Minimal memory footprint for cost-efficient scaling

- Compile-time safety for infrastructure code

- Battle-tested in production infrastructure (Kubernetes, Docker)

Agent Nodes: Where Intelligence Lives

Written in Python (your code) using the Agentfield SDK. Each node is an independent service that can be deployed, scaled, and updated without affecting others.

What you write:

    from agentfield import Agent
    from pydantic import BaseModel


    class Decision(BaseModel):
        # Assumed shape, mirroring the example response later on this page
        priority: str
        escalate: bool


    app = Agent("customer-support")


    @app.reasoner()
    async def triage_ticket(ticket: dict) -> Decision:
        # Your AI logic here
        decision = await app.ai(...)

        # Call other agents if needed
        sentiment = await app.call("sentiment-agent.analyze", ...)

        # Update shared memory
        await app.memory.set("ticket_status", "triaged")

        return decision

What the SDK handles:

- Communication with control plane

- Execution context propagation

- Memory operations

- Identity management

- Health checks and metrics

How a Request Flows

Agentfield supports two execution modes designed for different use cases. Understanding both reveals how the architecture enables scalability, observability, and event-driven patterns.

Why agent coordination through the control plane matters: When one agent calls another via app.call(), the request routes through the control plane instead of going directly over HTTP between agents. This choice enables patterns that direct agent-to-agent communication can't provide: automatic load balancing across agent instances, complete observability of the execution DAG, circuit breaking when agents fail, and context propagation without manual correlation IDs. Beyond separating infrastructure from logic, the control plane is the coordination hub that makes distributed agent systems production-ready.

Synchronous Execution (Fast Response)

For real-time workflows where you need immediate results:

    POST /api/v1/execute/support-agent.triage_ticket
    {
      "input": {"ticket_id": 12345, "message": "I can't log in"}
    }

    # Blocks until the result is ready (typical: 500ms - 30s)
    Response: 200 OK
    {
      "execution_id": "exec_abc123",
      "status": "completed",
      "result": {"priority": "high", "escalate": true},
      "duration_ms": 1247
    }

What happens:

- Control plane generates execution_id, persists to database before execution

- Routes request to agent node with context headers (X-Run-ID, X-Execution-ID, X-Session-ID)

- Agent executes your Python code with automatic context

- Control plane updates database with result, returns to client
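The steps above can be sketched in plain Python. This is a simplified model and not the Go control plane's code: the `EXECUTIONS` dict stands in for the database, the `handler` callable stands in for the HTTP call to the agent node, and the `running` and `failed` status values are assumptions.

```python
import uuid

# In-memory stand-in for the control plane's database (assumption for illustration).
EXECUTIONS = {}


def execute_sync(handler, payload, run_id, session_id):
    """Persist first, then dispatch, then record the outcome."""
    execution_id = f"exec_{uuid.uuid4().hex[:8]}"
    # 1. The record exists before any agent code runs.
    EXECUTIONS[execution_id] = {"status": "running", "input": payload, "result": None}
    # 2. Context travels as headers rather than as function arguments.
    headers = {
        "X-Run-ID": run_id,
        "X-Execution-ID": execution_id,
        "X-Session-ID": session_id,
    }
    try:
        # 3. Stand-in for the HTTP call to the agent node.
        result = handler(payload, headers)
    except Exception as exc:
        # A crash still leaves an auditable record behind.
        EXECUTIONS[execution_id].update(status="failed", error=str(exc))
        raise
    # 4. Store the result and return it to the client.
    EXECUTIONS[execution_id].update(status="completed", result=result)
    return {"execution_id": execution_id, "status": "completed", "result": result}


def triage(payload, headers):
    # Hypothetical agent handler: escalate anything that mentions login trouble.
    return {"priority": "high", "escalate": "log in" in payload["message"]}


response = execute_sync(
    triage,
    {"ticket_id": 12345, "message": "I can't log in"},
    run_id="run_1",
    session_id="sess_1",
)
```

Because the record is written before dispatch, a handler that raises still leaves an entry in `EXECUTIONS`, which is the property the audit trail relies on.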

Perfect for: User-facing APIs, chat interfaces, interactive workflows

Asynchronous Execution (Scalable Processing)

For long-running tasks, batch processing, or event-driven architectures:

    POST /api/v1/execute/async/analytics-agent.process_dataset
    {
      "input": {"dataset_id": "large_dataset"},
      "webhook": {
        "url": "https://your-app.com/webhooks/analytics",
        "secret": "your_webhook_secret"
      }
    }

    # Returns immediately (< 50ms)
    Response: 202 Accepted
    {
      "execution_id": "exec_xyz789",
      "status": "queued",
      "webhook_registered": true
    }

What happens:

- Control plane persists execution with status queued

- Returns immediately to client (HTTP 202 Accepted)

- Background worker pool picks up the job from queue

- Agent executes asynchronously (can run for minutes/hours)

- Webhook fires when complete with signed payload
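The last step mentions a signed payload but this page does not specify the signing scheme. As a hedged sketch, the receiver-side check below assumes an HMAC-SHA256 hex digest computed over the raw request body with the webhook secret. The function names are hypothetical, and the Async Execution & Webhooks guide should be treated as the authority on the actual scheme and header.

```python
import hashlib
import hmac
import json


def sign_payload(secret: str, body: bytes) -> str:
    # Assumed scheme: hex-encoded HMAC-SHA256 of the raw body.
    return hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()


def verify_webhook(secret: str, body: bytes, signature: str) -> bool:
    # compare_digest avoids leaking information through timing differences.
    expected = sign_payload(secret, body)
    return hmac.compare_digest(expected, signature)


body = json.dumps({"execution_id": "exec_xyz789", "status": "completed"}).encode("utf-8")
signature = sign_payload("your_webhook_secret", body)

is_valid = verify_webhook("your_webhook_secret", body, signature)
is_forged = verify_webhook("some_other_secret", body, signature)
```

Verifying against the raw bytes, before any JSON parsing, keeps the check independent of key ordering or whitespace changes.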

Perfect for: Data processing, ML inference, scheduled jobs, microservices integration

Learn more: Async Execution & Webhooks →

The Architecture Benefits You Get

Async-First Design

Sync mode blocks until complete. Async mode queues and returns instantly. Same control plane, same agents—just different endpoints. Use /execute for immediate results, /execute/async for background jobs. Learn more →
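As a small client-side illustration of the two endpoints, the helper below builds the request for either mode using only the standard library. The `build_execute_request` function is hypothetical. The paths and body shape follow the examples on this page, and the base URL is assumed to be a local control plane.

```python
import json
import urllib.request


def build_execute_request(base_url, target, payload, run_async=False, webhook=None):
    """Build (but do not send) a POST request for sync or async execution."""
    path = "/api/v1/execute/async/" if run_async else "/api/v1/execute/"
    body = {"input": payload}
    if run_async and webhook is not None:
        # Webhooks only make sense when nobody is waiting on the response.
        body["webhook"] = webhook
    return urllib.request.Request(
        url=base_url.rstrip("/") + path + target,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


sync_req = build_execute_request(
    "http://localhost:8080",
    "support-agent.triage_ticket",
    {"ticket_id": 12345, "message": "I can't log in"},
)
async_req = build_execute_request(
    "http://localhost:8080",
    "analytics-agent.process_dataset",
    {"dataset_id": "large_dataset"},
    run_async=True,
    webhook={"url": "https://your-app.com/webhooks/analytics", "secret": "your_webhook_secret"},
)
```

Passing either request to `urllib.request.urlopen` would send it to a running control plane. Only the path and the optional webhook block differ between the two modes.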

Audit Trail by Default

Execution records are persisted BEFORE execution. If your agent crashes, times out, or the server restarts, you have a complete record. No silent failures, no lost requests.

Context Propagates Automatically

When agent A calls agent B, context headers flow automatically. The control plane builds the execution DAG without configuration. You get distributed tracing for free. Learn more →
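One way to picture how parent-child links become an execution DAG is the sketch below, which groups execution records by their parent. The record shape and the `parent_execution_id` field name are assumptions for illustration, not the control plane's actual schema.

```python
from collections import defaultdict


def build_dag(records):
    """Return (root execution ids, mapping of parent id -> child ids)."""
    children = defaultdict(list)
    roots = []
    for rec in records:
        parent = rec.get("parent_execution_id")
        if parent is None:
            # No parent means the execution was started by an external client.
            roots.append(rec["execution_id"])
        else:
            children[parent].append(rec["execution_id"])
    return roots, dict(children)


records = [
    {"execution_id": "exec_a", "parent_execution_id": None},
    {"execution_id": "exec_b", "parent_execution_id": "exec_a"},
    {"execution_id": "exec_c", "parent_execution_id": "exec_a"},
    {"execution_id": "exec_d", "parent_execution_id": "exec_b"},
]
roots, children = build_dag(records)
```

Since each call carries its caller's execution ID in the context headers, the control plane has what it needs to record these links without any tracing code in the agent.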

Horizontal Scalability

The Go control plane is stateless. Scale it horizontally behind a load balancer. Agent nodes scale independently—deploy 1 instance or 100. Each service scales based on its needs.

What Your Agent Code Sees

Both execution modes deliver the same developer experience:

    from agentfield import Agent
    from pydantic import BaseModel


    class Decision(BaseModel):
        # Assumed shape, mirroring the example response above
        priority: str
        escalate: bool


    app = Agent("support-agent")


    @app.reasoner()  # Works in both sync and async mode
    async def triage_ticket(ticket: dict) -> Decision:
        # Context automatically available from headers
        # No need to pass execution_id, session_id manually

        # Memory scoped to session (user), actor (team), workflow
        history = await app.memory.get("customer_history")

        # Call other agents - context propagates automatically
        sentiment = await app.call("sentiment-agent.analyze",
                                   text=ticket["message"])

        # AI reasoning
        decision = await app.ai(
            system="You're a support triage expert",
            user=f"History: {history}\nSentiment: {sentiment}",
            schema=Decision
        )

        # Observability notes (tied to execution_id automatically)
        app.note(f"Triaged as {decision.priority}")

        return decision

Key insight: You write the same code whether the client calls sync or async. The control plane handles execution mode, queuing, webhooks, and DAG construction.

When to Use Each Mode

| Pattern | Mode | Why |
|---|---|---|
| Chat bot responses | Sync | User expects immediate reply |
| API endpoints | Sync | Client needs result in same request |
| Data pipeline stages | Async | Long-running, can retry |
| Scheduled jobs | Async | No client waiting |
| Webhook integrations | Async | Event-driven architecture |
| ML model inference (fast) | Sync | Results in milliseconds |
| ML model training | Async | Takes minutes/hours |

Production patterns: See Async Execution guide →

What's Tracked Automatically

Every execution (sync or async) captures:

- Timing: Start, end, duration

- Identity: Agent, reasoner, session, actor

- Lineage: Parent-child relationships (execution DAG)

- Payload: Input/output (configurable retention)

- Events: Notes, errors, status transitions

- Webhooks: Delivery status and retries
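To give the tracked fields a concrete shape, here is a hypothetical record type covering timing, identity, lineage, and events. The class and field names are assumptions for illustration and do not reflect the control plane's actual storage schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ExecutionRecord:
    # Identity
    execution_id: str
    agent: str
    reasoner: str
    session_id: str
    # Timing
    started_at: datetime
    ended_at: Optional[datetime] = None
    # Lineage: None for executions started by an external client
    parent_execution_id: Optional[str] = None
    # Events: status transitions are appended as they happen
    status: str = "queued"
    events: list = field(default_factory=list)

    def finish(self, ended_at: datetime, status: str = "completed") -> None:
        self.ended_at = ended_at
        self.events.append(f"{self.status} -> {status}")
        self.status = status

    @property
    def duration_ms(self) -> Optional[int]:
        if self.ended_at is None:
            return None
        # Integer division of timedeltas avoids floating-point rounding.
        return (self.ended_at - self.started_at) // timedelta(milliseconds=1)


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
record = ExecutionRecord("exec_abc123", "support-agent", "triage_ticket", "sess_1", start)
record.finish(start + timedelta(milliseconds=1247))
```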

The control plane manages this infrastructure. You focus on agent logic.

Key Components

Deployment Topologies

Local Development

All-in-one for fast iteration:

    # Build the agentfield binary (from control-plane directory)
    ./build-single-binary.sh

    # Run from an agent package directory (requires agentfield.yaml)
    agentfield dev            # Run agent in dev mode
    agentfield run my-agent   # Your agent node

The dev command requires an agentfield.yaml config file and main.py in your agent package directory.

Everything on localhost. Hot reload enabled. Logs stream to terminal.

Docker Compose

Isolated services for testing:

    services:
      af-server:
        image: agentfield/control-plane:latest
        ports: ["8080:8080"]
      my-agent:
        build: .  # Path to your agent directory (with Dockerfile, main.py, requirements.txt)
        environment:
          - AGENTFIELD_SERVER=http://af-server:8080

Make sure your agent code reads the AGENTFIELD_SERVER environment variable instead of hardcoding localhost:

    import os

    from agentfield import Agent

    app = Agent(
        node_id="my-agent",
        agentfield_server=os.getenv("AGENTFIELD_SERVER", "http://localhost:8080"),
    )

Multiple agent nodes, shared control plane. Perfect for CI/CD.

Kubernetes

Production-grade orchestration:

    # Control Plane Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: agentfield-control-plane
    spec:
      replicas: 3
      # ... horizontal scaling, health checks, etc.
    ---
    # Agent Node Deployment (independent)
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: customer-support-agent
    spec:
      replicas: 2
      # ... scales independently of other agents

Key advantages:

- Control plane scales horizontally (stateless Go services)

- Each agent node scales independently

- Teams deploy their agents without affecting others

- Standard K8s patterns (services, ingress, autoscaling)

Cloud Platforms

Deploy to Railway, Render, Fly.io, AWS, GCP, Azure—anywhere that runs containers.

Control plane needs:

- PostgreSQL database (for workflow tracking and queue)

- Exposed HTTP endpoint

Agent nodes need:

- Control plane URL (environment variable)

- Their own dependencies only

Why This Architecture Matters

For Individual Developers

Write agents like FastAPI services (familiar patterns). No infrastructure setup (control plane handles it). Debug with real workflow DAGs (not print statements).

For Engineering Teams

Independent deployment (marketing ≠ support ≠ analytics). Shared memory fabric (zero-config state sharing). Observable by default (understand multi-agent flows).

For Platform Teams

Horizontal scaling (stateless control plane). Fair resource allocation (queue limits and backpressure). Standard ops patterns (metrics, health checks, traces).

For Compliance/Security

Cryptographic audit trails (DIDs + VCs). Offline verification (export VC chains). Complete provenance (who executed what, when, why).

The Philosophy

Traditional agent frameworks optimize for single-app development. Agentfield optimizes for distributed production systems.

We chose Go for the control plane because boring infrastructure should be fast, reliable, and cheap to run. We chose Python for agent nodes because that's where AI developers already work.

We separate concerns so teams can own their agents, deploy independently, and share state automatically. We make identity automatic so compliance teams get proofs, not promises.

This is infrastructure for autonomous software.

Build on it with confidence.

Deploy to Production

The architecture you just learned about works identically from local development to enterprise Kubernetes:

Why This Architecture Wins

One dependency. Zero coordination overhead. Team autonomy. Cost savings. Deploy anywhere.

Deployment Overview

Deep dive: How stateless control plane enables infinite horizontal scaling, independent agent deployment, and zero infrastructure tax

Local Development

Start with af server on localhost. BoltDB embedded, zero config.

Kubernetes

Enterprise-grade orchestration with HPA, multi-region, and security policies

Next: Building Your First Agent →

Ready to see this in action? The 5-minute quick start shows you exactly how these pieces work together.